In the dynamic landscape of artificial intelligence (AI) and edge computing, advancements are happening at a rapid pace. As these technologies improve, demand has surged for AI that is both highly accurate and instantly responsive, especially in applications like self-driving cars and factory robots. However, many AI models are too large and computationally demanding to run on smaller edge devices such as phones or sensors.

Real-time edge AI with model quantization is a game changer that breaks down many of these barriers. Quantization compresses large AI models so they can run efficiently on edge devices. This matters because it enables real-time AI even with tight power and memory budgets. Imagine AI running directly on the device, without the round-trip lag of cloud-dependent systems. Let's explore how model quantization is transforming the AI landscape and making instant, efficient AI deployment at the edge a reality.

Why Edge AI Matters: Faster, Safer, and Cheaper!

Bringing AI to the edge is a big deal: it puts AI right where the action is, in our phones, self-checkouts, and smartwatches. That means crazy fast reactions without network delays, plus better security and privacy because data stays on the device instead of being sent to a central server. It also saves money by cutting the cloud bills for central storage and processing.

Edge AI has the potential to revolutionize self-driving cars, manufacturing robotics, and health monitoring with wearables. These applications need AI that makes decisions quickly, which is only possible if the models are fast and compact enough to run on the device.

That's where techniques like model quantization come in. They're getting a lot of attention right now because they let powerful AI models run on the less powerful processors found at the edge, bridging the gap between advanced AI and devices like our phones. In simple terms, quantization brings speedy intelligence to the edge and makes real-time edge AI practical. It's exciting stuff that could change a lot about how we use AI.

How Model Quantization Makes Edge AI Possible

For edge AI to work seamlessly, efficiency is key. These systems must deliver lightning-fast performance on compact devices like phones while staying smart and accurate. The challenge is that AI models keep growing in complexity, often reaching billions of parameters, which strains the memory and compute of phones and tiny sensors.
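A rough back-of-the-envelope sketch makes that scale concrete. It counts only the storage for model weights (ignoring activations, runtime overhead, and quantization metadata such as scales), and the 7-billion-parameter model is a hypothetical example chosen for illustration, not a figure from this article.

```python
# Approximate bytes needed to store one weight at each numeric precision.
BYTES_PER_WEIGHT = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(num_params, precision):
    """Storage for the model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_WEIGHT[precision] / 1e9


if __name__ == "__main__":
    num_params = 7_000_000_000  # hypothetical 7-billion-parameter model
    for precision in BYTES_PER_WEIGHT:
        print(f"{precision}: {weight_footprint_gb(num_params, precision):.1f} GB")
```

At full 32-bit precision, a model of this size needs far more memory than a typical phone has, which is exactly the gap quantization targets.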

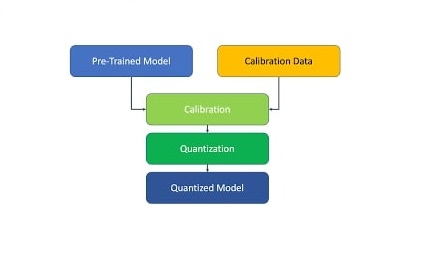

Enter the solution: model quantization. A superhero in the AI world, it shrinks models by reducing the numerical precision of their weights. Instead of storing each value as a hefty 32-bit float, quantization converts it to an 8-bit integer, cutting the size of the weights by 4x, usually with only a small loss in accuracy. The smaller models now fit comfortably on small edge devices.
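Here is a minimal sketch of how the 32-bit-to-8-bit conversion can work, using affine (scale and zero-point) per-tensor quantization in plain Python. It is one common scheme among several, not the method of any particular framework; the function names are hypothetical, and real toolkits add per-channel scales, calibration data, and optimized integer kernels.

```python
from array import array


def quantize_int8(weights):
    """Affine per-tensor quantization: map floats to signed 8-bit integers.

    Returns the int8 values plus the scale and zero point needed to map them back.
    """
    qmin, qmax = -128, 127
    # Widen the range to include 0.0 so that zero is represented exactly.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # Size of one integer step in float units; fall back to 1.0 for all-zero input.
    scale = (w_max - w_min) / (qmax - qmin) or 1.0
    zero_point = round(qmin - w_min / scale)
    q = array("b", (max(qmin, min(qmax, round(w / scale) + zero_point)) for w in weights))
    return q, scale, zero_point


def dequantize_int8(q, scale, zero_point):
    """Map int8 values back to approximate 32-bit floats."""
    return array("f", ((v - zero_point) * scale for v in q))


if __name__ == "__main__":
    weights = array("f", [-1.2, -0.5, 0.0, 0.3, 0.9, 2.5])  # toy float32 weights
    q, scale, zero_point = quantize_int8(weights)
    restored = dequantize_int8(q, scale, zero_point)
    print("int8 values:", list(q))
    print("float32 bytes:", weights.itemsize * len(weights))
    print("int8 bytes:   ", q.itemsize * len(q))
    print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each restored weight differs from the original by at most about half a quantization step (`scale / 2`), and 0.0 maps back exactly, while the int8 array takes a quarter of the bytes.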

There are several strategies for compressing models this way. Post-training quantization converts an already trained model, while quantization-aware training simulates low precision during training to preserve more accuracy. Developers can also choose the bit width and approach based on the target device, such as a phone or a sensor, and the processing power it has available. The key is striking the right balance between real-time responsiveness and retained accuracy. Used well, quantization gives edge devices enough AI capability to handle demanding tasks on their own.
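To illustrate that balance, the sketch below applies symmetric quantization at several bit widths to a synthetic layer of Gaussian-distributed weights and reports storage and mean reconstruction error. The synthetic weights and function names are assumptions for illustration only; the real accuracy impact has to be measured on the actual model and task.

```python
import random


def symmetric_quantize(weights, bits):
    """Symmetric per-tensor quantization to signed integers with the given bit width."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits, 1 for 2 bits
    max_abs = max(abs(w) for w in weights) or 1.0
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def evaluate(weights, bits):
    """Return (storage in bytes, mean absolute reconstruction error) for one bit width."""
    q, scale = symmetric_quantize(weights, bits)
    error = sum(abs(w - v * scale) for w, v in zip(weights, q)) / len(weights)
    storage_bytes = len(weights) * bits / 8  # ignores the single per-tensor scale
    return storage_bytes, error


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic stand-in for one layer's weights (hypothetical, not from a real model).
    weights = [rng.gauss(0.0, 0.05) for _ in range(4096)]
    for bits in (8, 4, 2):
        size, err = evaluate(weights, bits)
        print(f"{bits}-bit: {size:.0f} bytes, mean abs error {err:.5f}")
```

Fewer bits shrink storage linearly but increase reconstruction error, which is why very low bit widths usually rely on more careful methods such as quantization-aware training or error-compensating post-training methods like GPTQ.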

How Edge AI Will Change the Game

Get ready for edge AI to become an integral part of our daily lives, from smart cameras scanning their surroundings and health bracelets analyzing vital signs to inventory sensors in stores and self-driving vehicles. The possibilities are limitless! These applications demand on-the-spot AI processing that doesn't depend on a cloud connection.

According to IDC, an estimated $317 billion will be invested in edge computing technology by 2028, a staggering figure. As organizations move to edge AI and prioritize local data processing, robust platforms become essential. These specialized software stacks run models efficiently while keeping data local, which helps preserve security and privacy.

Edge devices generate data in many formats, from video to readings from various sensors, and platforms must process this information quickly for AI systems. A unified data platform is key to integrating advanced AI with the flood of data streaming from the edge. That is what unlocks real-time capabilities and makes edge AI a true game changer.

Where Is Edge AI Heading?

As we usher in an era of smarter devices and edge sensors, integrating AI with specialized data platforms becomes essential for blazing-fast, secure, real-time processing. Companies must prioritize building robust systems that meet the unique demands of the edge, where the future is all about speed!

At the heart of this transformation lies model quantization, the secret sauce that lets heavyweight AI run on compact edge hardware with limited resources. Quantization methods such as GPTQ compress large models so they can be deployed locally without overwhelming resource-constrained edge devices, while LoRA and QLoRA (which trains LoRA adapters on top of a 4-bit quantized base model) make it practical to fine-tune them on modest hardware. This shift brings major benefits, including rapid reactions, cost efficiency, data privacy, and independence from massive central servers.
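LoRA itself is a fine-tuning technique rather than a compression one: it freezes the original weight matrix W and learns a low-rank update B @ A, where B is d_out x rank and A is rank x d_in. A quick parameter count for a hypothetical 4096 x 4096 layer with rank 8 (both values are illustrative assumptions) shows why this is cheap. QLoRA goes further by also storing the frozen base weights in 4-bit precision.

```python
def full_update_params(d_out, d_in):
    """Trainable parameters when fine-tuning a dense d_out x d_in weight matrix directly."""
    return d_out * d_in


def lora_update_params(d_out, d_in, rank):
    """Trainable parameters for a LoRA update B @ A (B: d_out x rank, A: rank x d_in)."""
    return rank * (d_out + d_in)


if __name__ == "__main__":
    d_out = d_in = 4096  # hypothetical layer size
    rank = 8             # hypothetical LoRA rank
    full = full_update_params(d_out, d_in)
    lora = lora_update_params(d_out, d_in, rank)
    print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

In this example the adapter trains well under 1% of the parameters that a full update of the same layer would need.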

Edge AI is poised to revolutionize diverse industries, from self-driving vehicles and IoT sensors to health tech and beyond, with endless applications yet to be explored. As this space evolves with innovative solutions, the synergy of edge AI and quantization will redefine the possibilities, pushing the boundaries of real-time intelligence at the extreme edge.